At Ardigen our goal is to decode the microbiome for clinical success. Currently, investigations into the role of gut microbiota with connection to human health is gaining momentum, as numerous research projects and commercial ventures are being undertaken—especially in the case of cancer research. It all started with the discovery of correlations between microbial composition and therapy efficacy. Currently, the modulation of the gut microbiome by FMT has already been proven to impact treatment outcomes in patients [1, 2]. This gives hope for the development of microbiota-derived biomarkers and, ultimately, reliable therapies. To enable this, scientists are currently looking to determine the Mode of Action (MoA) of the microbiome – particular actionable features within the microbial haystack.

Keys to this discovery may be in utilisation of data from high-throughput screens. One of such screens is Shotgun Metagenome Sequencing (SMS), which provides data, enabling the understanding of functions performed by microorganisms and allowing for quantification of the low-abundant ones, for whom traditional 16S sequencing lacks depth. However, tools traditionally used in bioinformatics are not capable of fully exploiting this kind of complex data.

In microbiome research, just as in various other scientific domains, Machine Learning (ML) is getting a foothold and giving hope for the awaited breakthrough. But how common is it and how is it applied to metagenomic data? In this article, we review data science methods used in microbiome research on an example of recent papers utilizing SMS data from cancer patients undergoing immune checkpoint therapies [3-12].

The journey through microbiome with data science

Once the microbial samples leave sequencers and spectrometers, they typically end up as tables of numbers. Such data representations can be easily fed into an analytical software, be it statistical or machine learning (ML). All of the articles we have gathered for this review apply one of these two approaches.

The distinction between statistics and ML is a blurry one, as both fall into a wider field of data science. For the sake of clarity, we define (supervised) ML as a set of algorithms that predict certain outcomes on new data. For example, an ML algorithm would predict response to a therapy, based on the taxonomic data of a given patient. There are also unsupervised ML algorithms that find patterns in the data, but do not predict anything specific about the patient, these are often used for data visualization or quality control, but here we focus on the supervised methods.

On the other hand, the more traditional, statistical approach uses univariate analysis, where every patient’s feature (e.g. abundance of a specific taxa or a specific function) is separately analyzed with a statistical test. The specific test used depends on an application, so let’s take a closer look at which tests are applied in the papers that we found highly important to the field.

Statistical tests for microbiome data

In cases where the patients can be divided into groups (e.g. responders and non-responders) one can use univariate statistical tests that compare the differences between these groups by looking at each feature (say, relative abundance of specific taxa) separately and outputting a p-value that describes the significance of between-group difference. Furthermore, to decide if a certain feature is statistically different between the groups, it is necessary to define a significance threshold, traditionally a p-value lower than 0.05.

Mann-Whitney is a specific example of such a test, used in [3, 5, 7, 10] to compare the abundance of certain genera in stool samples. A similar test was used in [8] to assess the differences between gene / MGS (metagenomic species) counts (alas the authors do not provide the name of the test used). Other variants of univariate tests were used in [3, 4, 7, 10]. Overall, the authors favor the use of non-parametric tests (like the Mann-Whitney test), which are a type of univariate tests that only use the ordering of the values, which is reasonable because non-parametric tests do not carry any assumptions about distribution of the underlying data.

Also, authors of many papers [3, 7, 9, 10] apply a correction for a multiple comparison problem (one of Holm, Bonferroni, Benjamin-Hochberg), which makes accepting the null hypothesis less likely, resulting in fewer taxa or OTUs passing the significance threshold. The corrections push the reasoning in a more conservative direction, limiting the number of false positives, which, in our view, should be preferred and applied whenever required.

Ultimately, when it comes to more clinical areas of research, one of the most popular statistical tests is the log-rank (Mantel-Cox) test to assess the significance of Kaplan-Meier estimator in survival analysis [5, 6, 7, 8, 9, 12]. However, these tests are applicable only if the survival data is collected.

Machine learning in microbiome

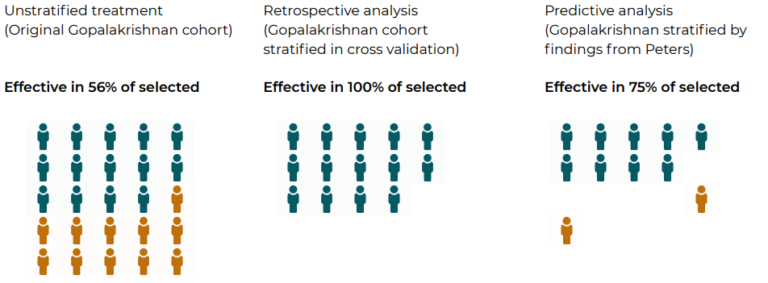

Figure 1. Application of Ardigen Microbiome Translational Platform enabled selection of patients for immunotherapy that increased the fraction of patients likely to respond to treatment.

While popular, univariate statistical tests have numerous shortcomings. For example, they work on each feature separately and may ignore a signal that manifests itself across multiple taxa, but is too weak when we look at each taxa individually. This is where a machine learning approach shines. Even the simplest ML model, like a linear regression, takes into account the influence of all the factors put together to predict the outcome. This way, we can deliver more reliable predictions and extract the relevant features in a more robust way. Once we have a trained model, we can also predict the independent variable (the variable of interest). Contrarily, in classical statistics, the notion of an independent variable occurs as well, but we do not want to predict it for new entities – it is rather used to stratify the observations in the dataset in order to characterize the subgroups separately and, eventually, compare them.

Compared with statistical methods, machine learning is not so commonly used across the reviewed articles, however there are some examples of ML-based ones.

One such example is [7], where authors trained a series of logistic regression models on binarized abundances (abundance=0 vs. abundance>0) of the taxa with the response status as dependent variable (also binary). Another example is the application of random forests in [10] to find the factors that influence the shift in gut microbiome. Ultimately, a series of machine learning algorithms has been trained in [12]. The authors implemented random forest, extra trees, SVM, elastic net and k-nearest neighbors.

One crucial part of applying ML in any context is the verification of how well the model performs. This is typically done by splitting the data into train and test sets (also called discovery and validation), where the model trained on the first set makes predictions on the other and its performance is evaluated. This should be repeated multiple times by randomly splitting the data into train and test sets to get a robust estimate of the performance. To some degree, this was done in [10, 12] to compare various models, but is too often skipped in research papers.

Taking a step back, what else influences the results?

Both machine learning and statistical results are largely influenced by the pre-processing of the data. This often prevents us from directly comparing the results presented in various papers. For example, many authors use MetaPhlAn2 to perform taxonomic profiling [3, 6, 9, 10], but the software options may differ from publication to publication. Hence, some systematic deviations are to be expected. Furthermore, in [10] a competing pipeline – MetaOMineR was used as well. Secondly, the authors tend to remove infrequent features (taxa, OTUs, pathways etc). The notion of “infrequency” is fuzzy though: some researchers are more liberal and retain variables present in as little as 10% of samples [4], whereas others are much more strict and postulate 25% of presence [6, 7].

The addition of synthetic features is also used to improve analysis. The most common of these is sample diversity (the alpha- or beta-diversities). However, even though these are commonly used, there is no consistency between researchers on what exactly should be calculated. To take alpha diversity as an example, some authors prefer Shannon diversity [7, 10, 11], while others choose its inverse [5, 12] and some choose simple richness [6, 8, 9, 10], or Simpson diversity [10].

A shorter path to biomarkers?

The field of microbiome research is only just starting to apply even the basic ML methods. Many works stop at calculating p-values and skip the weaker signals that may shed more light on the fuller biological picture that the data hides. These intricacies may be unraveled by re-analyzing the data with the skillful use of a more robust approach. This in turn may lead to a better understanding of therapies’ modes of action or the discovery of new biomarkers, and possibly even microbiome-related drugs supporting the therapy itself. That is why, we at Ardigen believe that applying ML to microbial data will increase the quality of the research and lead to breakthrough discoveries.

Here is the plain text version of the “Related Posts” section for the article “Machine Learning on its road to conquer Microbiome Research,” with the URLs shown alongside the anchor text:

Further Reading from Ardigen’s Knowledge Hub

Continue exploring how AI, ML, and advanced bioinformatics are driving breakthroughs in microbiome research and data-driven discovery:

- Davar, D., Dzutsev, A. K., McCulloch, J. A., Rodrigues, R. R., Chauvin, J. M., Morrison, R. M., Deblasio, R. N., Menna, C., Ding, Q., Pagliano, O., Zidi, B., Zhang, S., Badger, J. H., Vetizou, M., Cole, A. M., Fernandes, M. R., Prescott, S., Costa, R. G. F., Balaji, A. K., … Zarour, H. M. (2021). Fecal microbiota transplant overcomes resistance to anti-PD-1 therapy in melanoma patients. Science, 371(6529), 595–602. https://doi.org/10.1126/science.abf3363

- Baruch, E. N., Youngster, I., Ben-Betzalel, G., Ortenberg, R., Lahat, A., Katz, L., Adler, K., Dick-Necula, D., Raskin, S., Bloch, N., Rotin, D., Anafi, L., Avivi, C., Melnichenko, J., Steinberg-Silman, Y., Mamtani, R., Harati, H., Asher, N., Shapira-Frommer, R., … Boursi, B. (2021). Fecal microbiota transplant promotes response in immunotherapy-refractory melanoma patients. Science, 371(6529), 602–609. https://doi.org/10.1126/science.abb5920

- Frankel, A. E., Coughlin, L. A., Kim, J., Froehlich, T. W., Xie, Y., Frenkel, E. P., & Koh, A. Y. (2017). Metagenomic Shotgun Sequencing and Unbiased Metabolomic Profiling Identify Specific Human Gut Microbiota and Metabolites Associated with Immune Checkpoint Therapy Efficacy in Melanoma Patients. Neoplasia (New York, N.Y.), 19(10), 848–855. https://doi.org/10.1016/j.neo.2017.08.004

- Matson, V., Fessler, J., Bao, R., Chongsuwat, T., Zha, Y., Alegre, M. L., Luke, J. J., & Gajewski, T. F. (2018). The commensal microbiome is associated with anti-PD-1 efficacy in metastatic melanoma patients. Science, 359(6371), 104–108. https://doi.org/10.1126/science.aao3290

- Gopalakrishnan, V., Spencer, C. N., Nezi, L., Reuben, A., Andrews, M. C., Karpinets, T. V, Prieto, P. A., Vicente, D., Hoffman, K., Wei, S. C., Cogdill, A. P., Zhao, L., Hudgens, C. W., Hutchinson, D. S., Manzo, T., Petaccia de Macedo, M., Cotechini, T., Kumar, T., Chen, W. S., … Wargo, J. A. (2018). Gut microbiome modulates response to anti-PD-1 immunotherapy in melanoma patients. Science (New York, N.Y.), 359(6371), 97–103. https://doi.org/10.1126/science.aan4236

- Peters, B. A., Wilson, M., Moran, U., Pavlick, A., Izsak, A., Wechter, T., Weber, J. S., Osman, I., & Ahn, J. (2019). Relating the gut metagenome and metatranscriptome to immunotherapy responses in melanoma patients. Genome Medicine, 11(1). https://doi.org/10.1186/s13073-019-0672-4

- Wind, T. T., Gacesa, R., Vich Vila, A., de Haan, J. J., Jalving, M., Weersma, R. K., & Hospers, G. A. P. (2020). Gut microbial species and metabolic pathways associated with response to treatment with immune checkpoint inhibitors in metastatic melanoma. Melanoma Research, 30(3), 235–246. https://doi.org/10.1097/CMR.0000000000000656

- Routy, B., Le Chatelier, E., Derosa, L., Duong, C. P. M. M., Alou, M. T., Daillère, R., Fluckiger, A., Messaoudene, M., Rauber, C., Roberti, M. P., Fidelle, M., Flament, C., Poirier-Colame, V., Opolon, P., Klein, C., Iribarren, K., Mondragón, L., Jacquelot, N., Qu, B., … Zitvogel, L. (2018). Gut microbiome influences efficacy of PD-1-based immunotherapy against epithelial tumors. Science (New York, N.Y.), 359(6371), 91–97. https://doi.org/10.1126/science.aan3706

- Cvetkovic, L., Régis, C., Richard, C., Derosa, L., Leblond, A., Malo, J., Messaoudene, M., Desilets, A., Belkaid, W., Elkrief, A., Routy, B., & Juneau, D. (2020). Physiologic colonic uptake of 18F-FDG on PET/CT is associated with clinical response and gut microbiome composition in patients with advanced non-small cell lung cancer treated with immune checkpoint inhibitors. European Journal of Nuclear Medicine and Molecular Imaging, 1–10. https://doi.org/10.1007/s00259-020-05081-6

- Derosa, L., Routy, B., Fidelle, M., Iebba, V., Alla, L., Pasolli, E., Segata, N., Desnoyer, A., Pietrantonio, F., Ferrere, G., Fahrner, J.-E., Le Chatellier, E., Pons, N., Galleron, N., Roume, H., Duong, C. P. M., Mondragón, L., Iribarren, K., Bonvalet, M., … Zitvogel, L. (2020). Gut Bacteria Composition Drives Primary Resistance to Cancer Immunotherapy in Renal Cell Carcinoma Patients. European Urology, 78(2), 195–206. https://doi.org/10.1016/j.eururo.2020.04.044

- Salgia, N. J., Bergerot, P. G., Maia, M. C., Dizman, N., Hsu, J. A., Gillece, J. D., Folkerts, M., Reining, L., Trent, J., Highlander, S. K., & Pal, S. K. (2020). Stool Microbiome Profiling of Patients with Metastatic Renal Cell Carcinoma Receiving Anti–PD-1 Immune Checkpoint Inhibitors. European Urology, 78(4), 498–502. https://doi.org/10.1016/j.eururo.2020.07.011

- Peng, Z., Cheng, S., Kou, Y., Wang, Z., Jin, R., Hu, H., Zhang, X., Gong, J., Li, J., Lu, M., Wang, X., Zhou, J., Lu, Z., Zhang, Q., Tzeng, D. T. W., Bi, D., Tan, Y., & Shen, L. (2020). The Gut Microbiome Is Associated with Clinical Response to Anti–PD-1/PD-L1 Immunotherapy in Gastrointestinal Cancer. Cancer Immunology Research, 8(10), 1251–1261. https://doi.org/10.1158/2326-6066.cir-19-1014